Big Tobacco. Big Tech. Different Era, Same Pattern.

For a long time, smoking was just considered normal.

It was advertised as cool, harmless. Even healthy, strangely enough.

Doctors recommended it. Celebrities sold it. Governments allowed it without regulation.

I still remember flying as a kid, sitting just a few rows away from the smoking section on planes. The smell lingered everywhere. That’s just how it was. No one questioned it much.

Looking back, it’s hard to believe how embedded tobacco was in daily life. But for decades, people genuinely didn’t think it was a big deal — even though, deep down, some part of us probably knew better. And even when the research started coming out, showing the health risks clearly, the industry pushed back hard. Funded doubt. Bought silence. Delayed action.

And behind it all, the product itself was doing exactly what it was designed to do: keep people addicted. Hooked. Dependent.

It’s taken generations to unwind that damage.

And yet, here we are again — with a different product, but the same playbook.

The Digital Addiction We Don’t Like to Admit

I’m talking about Big Tech.

Not just the companies — but the entire system that now surrounds us.

Phones, platforms, apps, feeds, logins.

The way we work, talk, share, remember, even think — increasingly runs through a handful of companies. It’s become normal. Expected. Invisible.

And just like smoking once was, we’ve come to rely on it, enjoy it, even defend it — without really questioning what it’s doing to us.

Do we really need big warning labels before it becomes clear to us what the real damage is?

Persuasive Technology: the engine behind the hook

What keeps us glued to these platforms isn’t just great design — it’s intentional manipulation.

Most major tech platforms rely on what’s called persuasive technology — the use of scientifically tested psychological techniques to steer user behavior toward a specific outcome, usually increasing engagement, screen time, or ad clicks.

This isn’t a side effect. It’s the design goal.

As the Center for Humane Technology describes it, this is the “race to the bottom of the brainstem”: bypassing thoughtful choice in favor of reflex and compulsion.

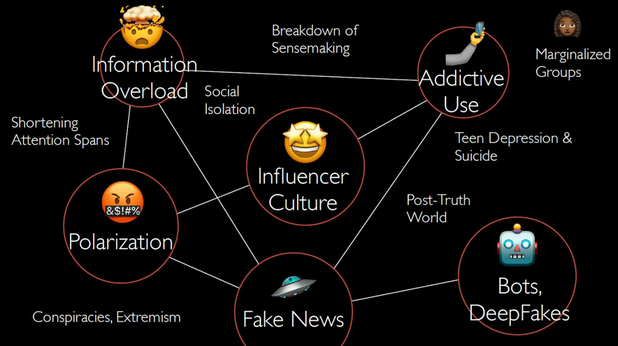

The results are everywhere:

- Shortened attention spans

- Teen depression and anxiety

- Polarization and conspiracy culture

- Information overload

- A breakdown in sensemaking

- Bots, deepfakes, and fake news flooding our feeds

These harms aren’t isolated. They’re interconnected symptoms of systems that reward addiction and distraction over well-being and truth.

“Persuasive tech uses scientifically tested design strategies to manipulate human behaviour” - Image credit: Center for Humane Technology

It’s more than just convenience

What makes this harder to talk about is how useful everything seems.

We need our phones. We use these tools to stay in touch, get things done, organize our lives.

But that usefulness is also the trap.

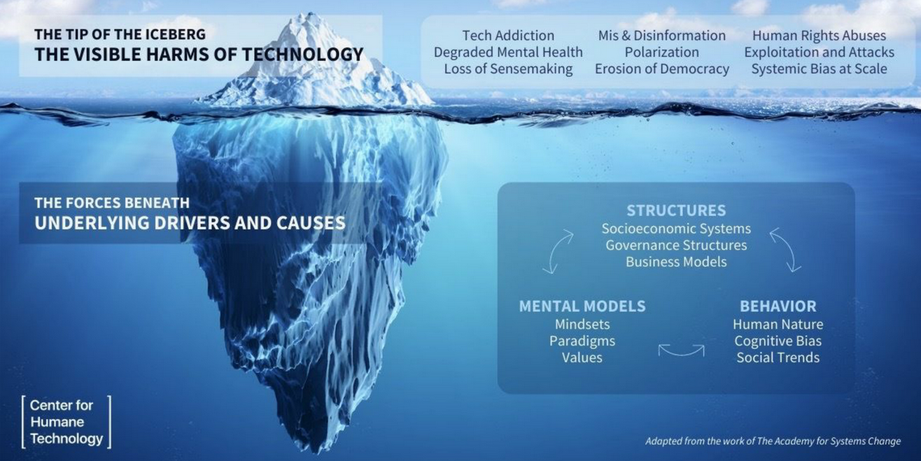

Behind the scenes, it’s not just about features or services — it’s about extraction.

Your data. Your attention. Your time.

Every interaction feeds the machine.

Every click is part of a profile.

Every scroll fine-tunes a system that knows how to keep you scrolling just a little bit longer.

And the damage runs deeper than just what we can see. Like the tip of an iceberg, the visible harms — tech addiction, degraded mental health, erosion of trust and democracy — are just the surface. Beneath them lie structural causes: broken business models, governance failures, and cultural norms that normalize it all.

“Under immense pressure to prioritise engagement & growth, tech platforms have created a race for human attention that unleashed visible and invisible harms to society” - Image credit: Center for Humane Technology

The Extractive Tech paradigm

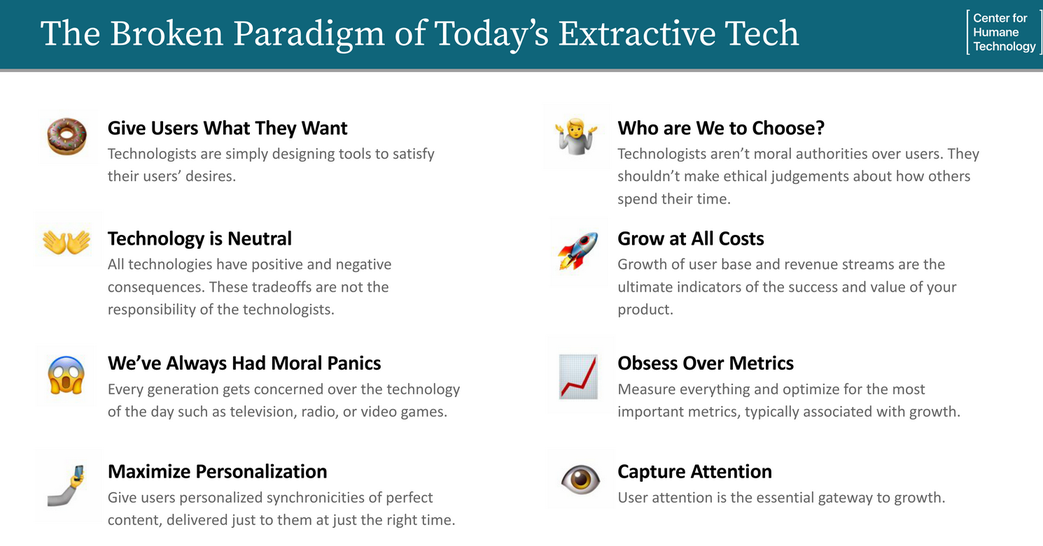

Part of the reason this continues is the way the tech industry frames its role.

According to the Center for Humane Technology, the dominant paradigm of Silicon Valley — the mental model that underpins much of our technology — is deeply flawed.

You’ve probably heard the common lines:

- “We’re just giving users what they want.”

- “Technology is neutral — it’s what people do with it that matters.”

- “It’s just like any other moral panic — people once feared radio and TV too.”

These talking points are used to sidestep accountability: they downplay consequences and avoid ethical responsibility.

But when products and platforms are fundamentally designed to capture attention, maximize engagement, and grow at all costs, you don’t get neutrality. You get a system optimized for addiction and profit, not human betterment.

Image credit: Center for Humane Technology

It’s not just a personal problem. It’s a structural one as well.

When we talk about tech addiction, we often frame it as a personal failing.

Spend less time on your phone. Turn off notifications. Take a break.

But that’s like telling smokers in the 1980s to “just quit” while tobacco ads ran on every billboard and doctors handed out free samples.

The issue isn’t just how we use the tools. It’s how the tools are built — and who they serve.

Big Tech has shaped itself into something far more powerful than just a group of tech companies.

It’s a digital infrastructure.

A cultural habit. A business model that thrives on attention and control — not on health, empowerment, or freedom.

It’s hard to admit the dependency

Most of us don’t want to hear this, and I get that. It’s uncomfortable. We rely on these systems quite heavily. Many of us make our living through them. And even though there are plenty of alternatives, the path towards them is unclear or seemingly difficult. Even writing this, I’m aware of my own contradictions, as I still use and depend on some Big Tech in daily life.

And that’s exactly the point. These monopolistic platforms are embedded almost everywhere. That makes them harder to see, harder to challenge, and makes life outside of them even harder to imagine.

But there is hope. There are alternatives, good ones, and there are simple paths towards them. And life, connection, and communication most certainly continue and thrive beyond the reach of these extractive ecosystems.

Just like with tobacco or any other addiction, the shift doesn’t happen all at once.

It starts with questioning. Then comes noticing and accepting, followed by a fair bit of resisting.

And eventually, choosing differently, especially when it’s inconvenient and makes you uneasy, irritated and uncomfortable.

Big Tech may not leave a black stain on your lungs, but it’s shaping our behavior, our political views and worldviews, and our future in ways most of us did not consent to.

And the sooner we collectively acknowledge the grip it has, the sooner we can loosen it and liberate ourselves.

If you want to learn more or better understand the impacts, I recommend watching this old but still relevant documentary, available for free through the Internet Archive:

I’d also recommend this TED Talk by Carole Cadwalladr, an investigative journalist who has been exposing and challenging Big Tech since 2018.

The Center for Humane Technology also offers Foundations of Humane Technology, an excellent self-paced online course that helps raise awareness as well as build more humane tech.

There are a lot of good resources and alternatives out there. I might set some time aside to start gathering and compiling a collection of them on my site.

Thanks for dropping by and reading!